How One UT Robotics Lab Could Change Our World and Other Worlds, Too

Luis Sentis stands behind the desk in his fourth-floor office in the Woolrich Building, lunging to the right with an arm outstretched, then twisting back to his left, reaching for something on his desk. The performance is akin to a yoga pose, a dance, or the emotive physicality of an ancient marble statue. But Sentis, an associate professor in the Cockrell School’s Department of Aerospace Engineering, is actually demonstrating the future of robotics, and the kinds of technology that may soon make a manned mission to Mars, or extended stays on the moon, possible.

Specifically, he’s interested in how two kinds of machines are designed, controlled, and used: robots made to look and move like humans, and machines that enhance a human’s physical ability.

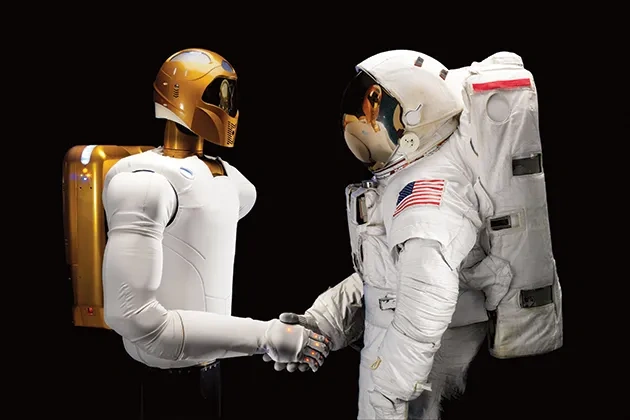

Two new grants, awarded this summer by the National Science Foundation and the Department of Defense, are allowing Sentis’ Human Centered Robotics Group to explore new ways in which these machines might help. The group will work on humanoid machines designed to facilitate human exploration of Mars (think walking, talking sci-fi robots) and robotic exoskeletons (think mechanical suits) to give the wearer greater strength and endurance. The projects also offer a glimpse into the future of robotics and the artificial intelligence that powers it.

Sentis’ team focuses on the ways seemingly sci-fi technologies might change the world for the better by working with, communicating with, and protecting humans. The group, made up of PhD students, has already established a pedigree. Actuators, moving parts designed by Sentis’ lab, are part of NASA’s Valkyrie robot. Along with engineering students, Sentis built Dreamer, a friendly-looking bot intended to attract and engage with humans. Earlier this year, his group placed third in an international competition meant to test a robot’s domestic skills, like navigating and stocking a kitchen.

“A legged robot is nothing more than a robot with more degrees of freedom than others,” he says. A rover, the current standard of interplanetary exploration, is good for crawling over craggy Martian surfaces but has to execute a multi-point turn to change direction. A humanoid can twist, turn, lean, and reach.

“What I say is, ‘How can we make fiction a reality today?’ Especially in the sense of making it economically make sense.” More and more, labs like Sentis’ are helping life imitate art.

Take, for example, pop culture’s most well-known robots: C-3PO and R2-D2, the omnipresent duo of the Star Wars saga. They’re designed for different things. C-3PO, a shiny humanoid fluent in six million forms of communication, interacts with people in human environments. R2-D2, shaped like a wheeled trash bin, co-pilots spaceships. Two different tasks, two kinds of robots. The robots being worked on by the Human Centered Robotics Group are of the C-3PO variety.

The habitats currently being designed for Mars exploration are cluttered, Sentis says: “Not your average 5,000-square-foot house.” A rover, or R2-D2, would be useless in such an environment, bumping into things and getting stuck in small spaces. But a robot built to physically mimic a human is far more efficient. The humanoid robots designed during the four-year grant will be able to install solar panels, open hatches, drive rovers, and take on other simple tasks in a human environment, even once the humans arrive.

Robotic exoskeletons offer a similar challenge. The Department of Defense grant is meant to solve comparable problems in designing wearable machines that can help soldiers carry heavy packs over long distances or, possibly in the future, help astronauts schlep over difficult terrain in diminished gravity. Eventually, these kinds of developments are intended to make their way into other fields, like health care, helping people with limited mobility due to accidents, illness, or age.

“The comfort of a human has to be part of the control process,” he says. More than anything, a robot should simply work well. “You’re not going to build a two-legged robot to go back and forth one mile on Mars. Because the rover will do it better.” But other projects, like preparing the environments humans might eventually reside in, require a new set of robotic tools powered by artificial intelligence.

Robots that currently work side by side with humans in places like the International Space Station, Sentis says, are moving closer to humans, as opposed to bots that are “decoupled” from humans, like rovers. Robots that work closely with people must be productive, but in a way that doesn’t inhibit (or, ideally, that even facilitates) a human’s productivity. Crucially, the group will explore the gap between giving a robot a command, say, “Pick up this cup,” and what the robot actually does: gauge the distance to the cup, reach for it, apply the right amount of pressure, and lift the cup. It’s a process Sentis calls low-level controller synthesis. And even when all these processes go the way they’re meant to, a robot may still fail to perform the function.

“And this is something other people fail to think about,” Sentis says with his characteristically lightning-fast cadence. “The robots are not the movement. The robots are the muscles themselves, the actuators.” Bridging the gap between the artificial intelligence that commands the machine and the discrete moving parts of a machine is a continuing challenge, and the goal of Sentis’ lab.

“Things in the future are going to change shape, reconfigure themselves. They’re going to attach to human bodies,” he says. “These are very complex problems. Exploring the impact of these machines is going to have an effect.”

Photo via Thinkstock